Optimizing Deep Learning AI Models with Optimization by PROmpting (OPRO)

Artificial Intelligence (AI) has advanced rapidly in recent years, but applying it to real-world problems still poses obstacles. To address one of them, researchers from DeepMind have introduced an approach called Optimization by PROmpting (OPRO), which uses large language models as optimizers. In this blog post, we'll look at how OPRO works and explore its potential implications for the future of AI programming.

What is Optimization by PROmpting (OPRO)?

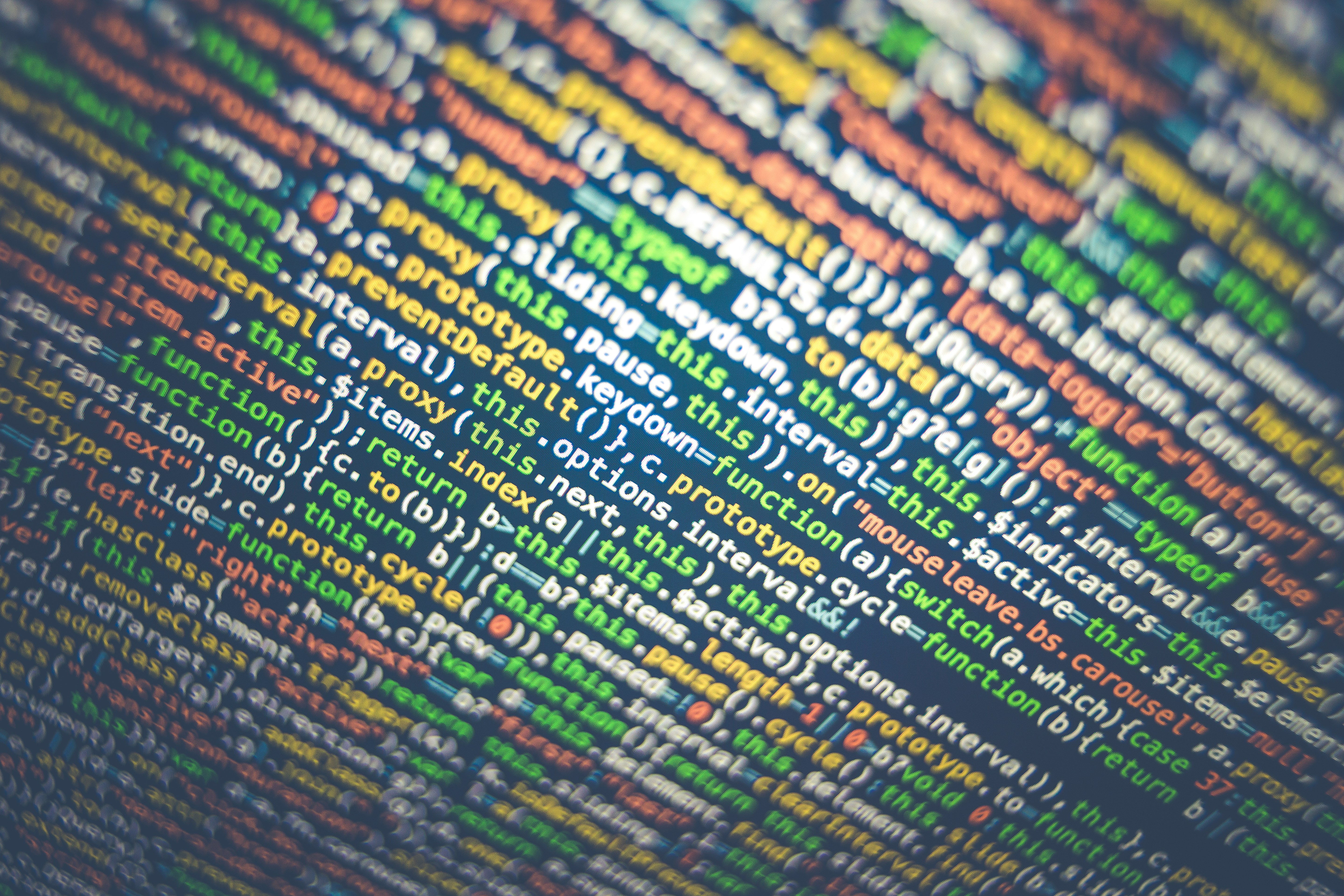

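OPRO is an approach to optimization that uses large language models (LLMs) to generate candidate solutions from a natural language description of the problem and from previously generated solutions. Each new candidate is scored and fed back into the prompt, so the LLM can build on what has already been tried.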
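The idea above can be sketched as a small loop. This is a minimal illustration, not DeepMind's implementation: `build_meta_prompt`, `opro_loop`, and `stub_propose` are hypothetical names, the "LLM" is a deterministic stand-in function so the example runs offline, and the task (find the integer that maximizes -(x - 7)^2) is a toy chosen for clarity.

```python
from typing import Callable, List, Tuple

Scored = Tuple[str, float]  # (solution, score)


def build_meta_prompt(task: str, history: List[Scored], top_k: int = 5) -> str:
    """Combine the task description with the best previous solutions and their scores."""
    best = sorted(history, key=lambda pair: pair[1])[-top_k:]  # ascending, best last
    lines = [task, "", "Previous solutions and scores:"]
    lines += [f"solution: {sol} | score: {score:.2f}" for sol, score in best]
    lines.append("Propose a new solution with a higher score.")
    return "\n".join(lines)


def opro_loop(
    task: str,
    propose: Callable[[str], str],     # stands in for an LLM call
    evaluate: Callable[[str], float],  # objective to maximize
    seed: str,
    steps: int = 10,
) -> Scored:
    """Repeatedly ask for a new solution, score it, and add it to the history."""
    history: List[Scored] = [(seed, evaluate(seed))]
    for _ in range(steps):
        candidate = propose(build_meta_prompt(task, history))
        if any(candidate == sol for sol, _ in history):
            continue  # skip solutions that were already tried
        history.append((candidate, evaluate(candidate)))
    return max(history, key=lambda pair: pair[1])


def stub_propose(meta_prompt: str) -> str:
    # Hypothetical stand-in for an LLM: reads the best solution listed in the
    # prompt (the last "solution:" line) and nudges it up by one.
    solution_lines = [line for line in meta_prompt.splitlines() if line.startswith("solution: ")]
    best_so_far = int(solution_lines[-1].split("|")[0].split(":")[1])
    return str(best_so_far + 1)


best_solution, best_score = opro_loop(
    task="Find the integer x that maximizes -(x - 7)^2.",
    propose=stub_propose,
    evaluate=lambda s: -(int(s) - 7) ** 2,
    seed="0",
)
print(best_solution, best_score)
```

In a real setup, `propose` would send the meta-prompt to an LLM and parse its reply; everything else in the loop would stay the same.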

- How does OPRO optimize LLM prompts for accuracy? Users specify a target metric, such as accuracy, and can add instructions that guide the optimization process. This allows for tailoring AI models to specific requirements and problem domains.

- What are the future prospects for OPRO? OPRO offers a systematic way to search for effective LLM prompts, but it still needs further testing in real-world applications.

💡 OPRO addresses the limitations of derivative-based optimizers: Derivative-based optimizers have proven effective for many AI applications. However, many real-world problems do not provide a gradient to follow, which limits where these optimizers can be used. OPRO offers a promising alternative: instead of following gradients, an LLM proposes solutions based on a natural language problem description and previously tried solutions.

💡 OPRO enables effective solutions through natural language prompts: Because optimization problems are defined in natural language, LLMs like OpenAI's ChatGPT and Google's PaLM can be asked to generate solutions directly. In some cases, these solutions have matched or even surpassed expert-designed heuristic algorithms on small-scale optimization problems.

💡 Optimizing LLM prompts for accuracy: OPRO goes beyond generating solutions; it optimizes LLM prompts to maximize accuracy. Users can specify target metrics and provide additional instructions to guide the optimization process. This opens up new possibilities for tailoring AI models to specific requirements and problem domains.
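To make the idea of a target metric concrete, here is a minimal sketch of scoring candidate prompts by exact-match accuracy on a small labeled set. It rests on stated assumptions: `prompt_accuracy` and `toy_model` are hypothetical names, the examples are made up, and `toy_model` is a hand-written stand-in whose behavior is invented for illustration. It does not reflect how any real LLM responds.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (question, expected answer)


def prompt_accuracy(prompt: str, examples: List[Example], model: Callable[[str], str]) -> float:
    """Fraction of examples the model answers exactly right when given `prompt`."""
    hits = sum(
        model(f"{prompt}\n{question}").strip() == answer
        for question, answer in examples
    )
    return hits / len(examples)


def toy_model(text: str) -> str:
    # Hypothetical stand-in for an LLM. The rule is invented for illustration:
    # it replies with a bare number only when told to, otherwise with a sentence.
    question = text.splitlines()[-1]  # e.g. "2 + 3"
    left, right = question.split(" + ")
    total = str(int(left) + int(right))
    return total if "number only" in text else f"The answer is {total}."


examples = [("2 + 3", "5"), ("10 + 4", "14"), ("7 + 7", "14")]
candidates = [
    "Solve the problem.",
    "Solve the problem. Reply with the number only.",
]

# Score every candidate prompt and keep the one with the highest accuracy.
scores = {prompt: prompt_accuracy(prompt, examples, toy_model) for prompt in candidates}
best_prompt = max(scores, key=scores.get)
print(best_prompt, scores[best_prompt])
```

A score like this is what an optimization loop would attach to each candidate prompt before asking the LLM for the next one. Swapping in a different metric only requires changing `prompt_accuracy`.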

💡 The impact of prompt engineering on model output: Prompt engineering plays a crucial role in OPRO's effectiveness. Semantically similar prompts can yield different results, highlighting the need for careful consideration when designing prompts. Small changes in wording or instruction can have significant effects on the AI model's output.

💡 OPRO's compatibility with popular LLMs: OPRO is compatible with LLMs like OpenAI's ChatGPT and Google's PaLM. This allows developers to leverage these powerful models in real-world applications and gradually improve task accuracy through the optimization process. OPRO facilitates systematic exploration of possible LLM prompts to find the most effective one for a specific type of problem.

💡 Avoiding anthropomorphization of LLM behavior: While LLMs can achieve impressive results, it's important to remember that their behavior varies depending on the prompt. Anthropomorphizing these AI models can lead to unrealistic expectations and misinterpretations of their capabilities. OPRO serves as a valuable reminder of the limitations and unique characteristics of AI systems.

💡 Challenges and future prospects: OPRO presents a systematic approach to searching for effective LLM prompts, but further testing is needed to evaluate its performance in real-world applications. Refining and extending the method will be needed before its potential for complex real-world problems becomes clear.

Optimization by PROmpting (OPRO) represents an exciting advancement in AI programming. By harnessing the power of natural language prompts and optimizing LLM output, OPRO opens up new possibilities for effectively solving real-world optimization problems. As we continue to explore the potential of AI, it's essential to approach these advancements with both excitement and caution, recognizing the unique characteristics and limitations of these intelligent systems.